AutoDok: Autonomous Accident Documentation (Autonome Unfalldokumentation)

01.07.2021

Motivation and objectives

The AutoDok project aims to develop an airborne solution for the automated police recording of traffic accidents. It is intended to support police forces in documenting accidents and preserving evidence, particularly after serious traffic accidents, so that roads can be reopened as quickly as possible and the risk of secondary accidents is reduced. This increases the safety of citizens and of the emergency services on site. The planned system follows a fully integrated approach: all necessary subsystems, such as the UAV, ground station, processing and storage, are integrated into one mobile unit, a specially equipped accident recording and documentation vehicle. This facilitates field tests in real-life scenarios and allows the challenges and problems of the overall system to be identified and addressed in the pre-commercial phase.

The system developed in AutoDok is intended to be a functional demonstrator that is as easy as possible to use for accident documentation in real operations. The drone autonomously flies over the operational area previously defined by the operator, following an automatically calculated flight pattern. In the control center, 3D models and true-to-scale orthophotos of the accident area are created from the sensor data and saved for further processing.

Results

Demonstrator drone and vehicle

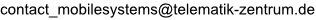

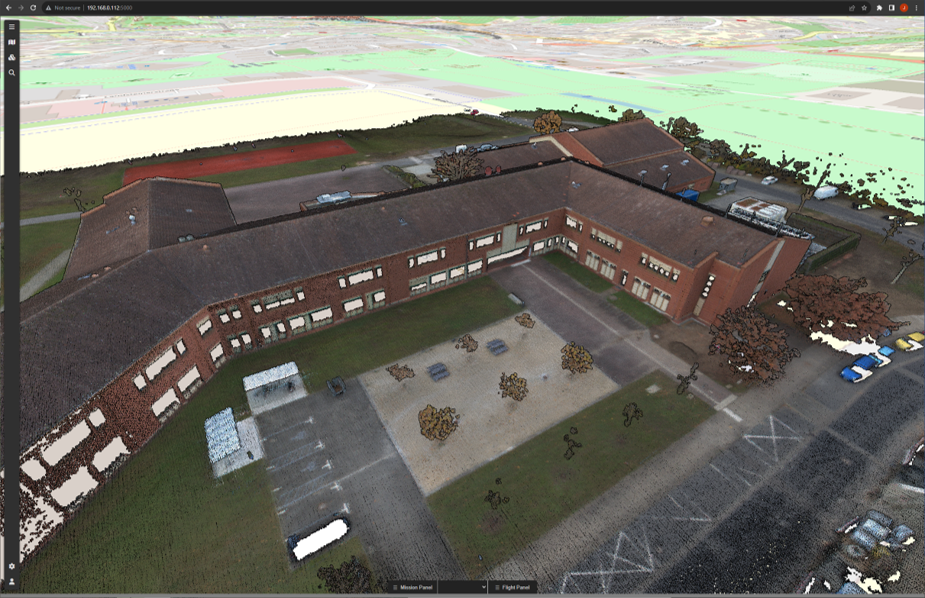

The system is operated simply and intuitively via the ground control station integrated into the vehicle. Technically, the station consists of several monitors, a powerful PC system and the communication link to the drone system. The associated software is used to plan and monitor the recording and the flight on site. The data is transmitted during the flight and processed into a 3D model so that a comprehensive overview of the situation is immediately available.

The drone system is based on a DJI M300 drone, enhanced with a sensor system comprising a 3D laser scanner, camera systems, an onboard computer and components for power supply and communication. Thanks to the integrated drone control system, the entire mission from take-off and data acquisition to landing can be carried out autonomously. Remote control inputs are taken into account collaboratively during the ongoing autonomous flight, e.g. to make small corrections. Drone control can still be taken over completely via the remote control, so that full manual intervention is possible at any time.

Ground station and communications

A specially adapted long-range WiFi link was used for communication; its drivers were adapted so that it can also use the 5 GHz band. It achieves a maximum range of 1500 m (2.4 GHz) or 600 m (5 GHz) at a data rate of 1 Mbit/s.

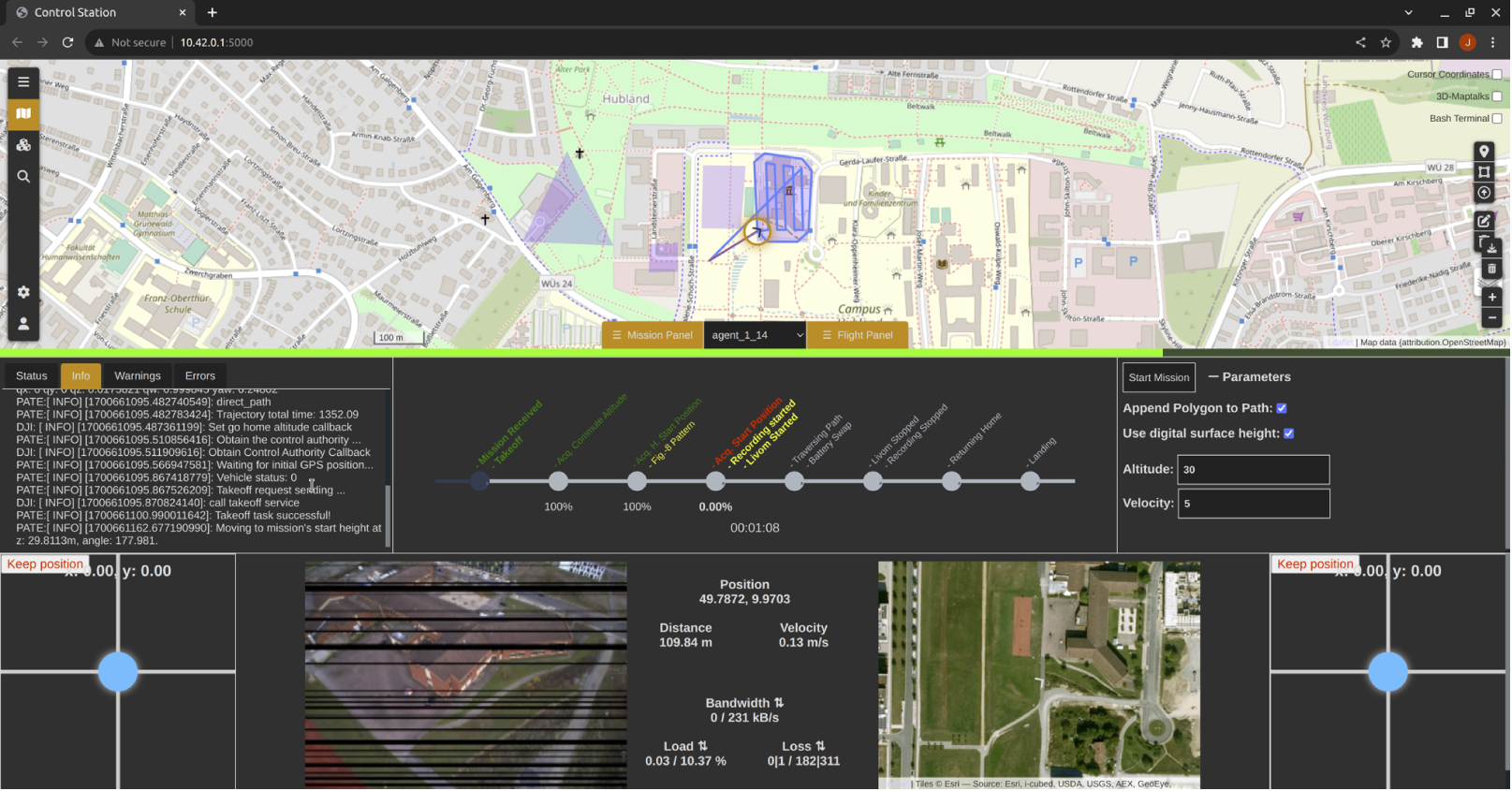

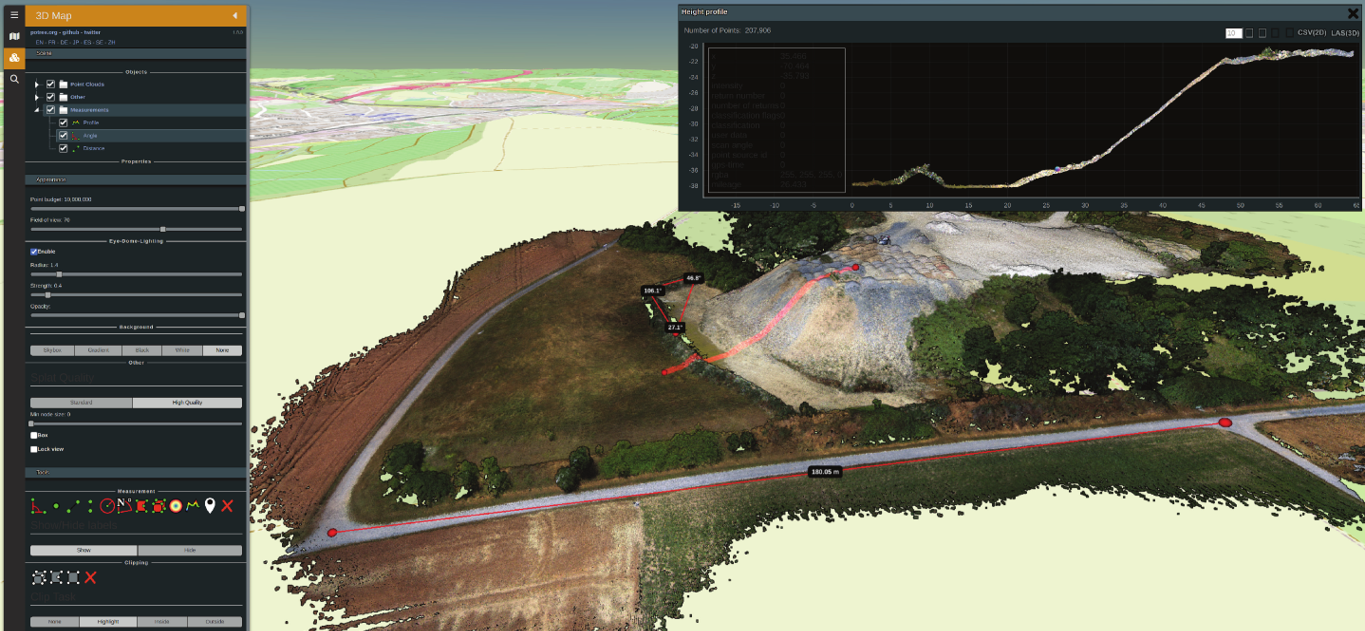

The ground station for mission planning and execution monitoring enables simple operation of the drone system. It allows interactive selection of the target area, automatically calculates optimized flight plans and coordinates the execution of the operation. The operator can track the status of the drone and intervene at any time. Flight planning takes into account parameters, information and constraints such as sensor coverage, height maps, multiple areas, restricted areas and geofences.
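How sensor coverage drives a flight plan can be illustrated with a simple back-and-forth ("lawnmower") pattern. The following is a minimal sketch, not the AutoDok planner: it assumes a nadir-pointing sensor, flat terrain and an axis-aligned rectangular target area, and the function names, the field of view and the overlap value are hypothetical.

```python
import math


def swath_width(altitude_m, fov_deg):
    """Ground width seen by a nadir-pointing sensor (flat terrain assumed)."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def lawnmower_waypoints(x_min, y_min, x_max, y_max,
                        altitude_m, fov_deg, overlap=0.5):
    """Back-and-forth waypoints (x, y, z) covering a rectangle.

    `overlap` is the assumed fraction by which neighbouring swaths overlap.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    if x_max <= x_min or y_max < y_min:
        raise ValueError("invalid rectangle")

    # Largest allowed distance between two neighbouring flight lines.
    max_spacing = swath_width(altitude_m, fov_deg) * (1.0 - overlap)
    width = y_max - y_min

    # Place lines from y_min to y_max so that the actual spacing
    # never exceeds max_spacing.
    n_lines = math.ceil(width / max_spacing) + 1
    step = width / (n_lines - 1) if n_lines > 1 else 0.0

    waypoints = []
    for i in range(n_lines):
        y = y_min + i * step
        if i % 2 == 0:   # even lines fly in +x direction
            waypoints += [(x_min, y, altitude_m), (x_max, y, altitude_m)]
        else:            # odd lines fly back in -x direction
            waypoints += [(x_max, y, altitude_m), (x_min, y, altitude_m)]
    return waypoints


# Example: 100 m x 40 m area, 30 m altitude, 90 degree field of view.
plan = lawnmower_waypoints(0, 0, 100, 40, altitude_m=30, fov_deg=90)
```

A real planner would additionally handle arbitrary polygons, height maps, restricted areas and geofences, which this sketch leaves out.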

Autonomous flight

The quality of the recorded data and of the position determination is sensitive to abrupt changes in the drone's motion. Ideally, the drone should move forward at a constant speed and change its orientation along continuous curves that are as smooth as possible. The path planning and flight control software PATE (Path-Oriented Aerial Trajectory Execution) was developed for this purpose. It generates and optimizes polynomial trajectories from the waypoints of the flight plan and dynamically creates and executes smooth joystick commands to control the drone. Additionally, it supports maintaining altitude above ground using LiDAR data, avoiding simple obstacles and replanning for extended obstacles.
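The idea of a polynomial trajectory between waypoints can be sketched for a single axis. The example below is an illustration only and does not reproduce PATE: it assumes a quintic polynomial per segment with prescribed position, velocity and acceleration at both ends, and the function names are hypothetical. Sampling the velocity of such a segment at a fixed rate yields smoothly varying setpoints of the kind that could be sent as joystick-style velocity commands.

```python
def quintic_coefficients(p0, v0, a0, p1, v1, a1, duration):
    """Coefficients c0..c5 of p(t) = sum(c_k * t**k).

    The polynomial matches position, velocity and acceleration
    at t = 0 (p0, v0, a0) and at t = duration (p1, v1, a1).
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    T = duration
    c0 = p0
    c1 = v0
    c2 = a0 / 2.0
    c3 = (20.0 * (p1 - p0) - (8.0 * v1 + 12.0 * v0) * T
          - (3.0 * a0 - a1) * T**2) / (2.0 * T**3)
    c4 = (30.0 * (p0 - p1) + (14.0 * v1 + 16.0 * v0) * T
          + (3.0 * a0 - 2.0 * a1) * T**2) / (2.0 * T**4)
    c5 = (12.0 * (p1 - p0) - 6.0 * (v1 + v0) * T
          - (a0 - a1) * T**2) / (2.0 * T**5)
    return [c0, c1, c2, c3, c4, c5]


def position(coeffs, t):
    """Evaluate the polynomial at time t."""
    return sum(c * t**k for k, c in enumerate(coeffs))


def velocity(coeffs, t):
    """Evaluate the first derivative of the polynomial at time t."""
    return sum(k * c * t**(k - 1) for k, c in enumerate(coeffs) if k > 0)


# Example: move 20 m along one axis in 4 s, starting and ending at rest.
segment = quintic_coefficients(0.0, 0.0, 0.0, 20.0, 0.0, 0.0, duration=4.0)

# Velocity setpoints sampled at an assumed rate of 10 Hz.
setpoints = [velocity(segment, i / 10.0) for i in range(41)]
```

With zero velocity and acceleration at both ends the drone would stop at every waypoint. To keep a continuous forward speed, neighbouring segments would instead share non-zero boundary velocities, and segment durations would be tuned by an optimizer.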

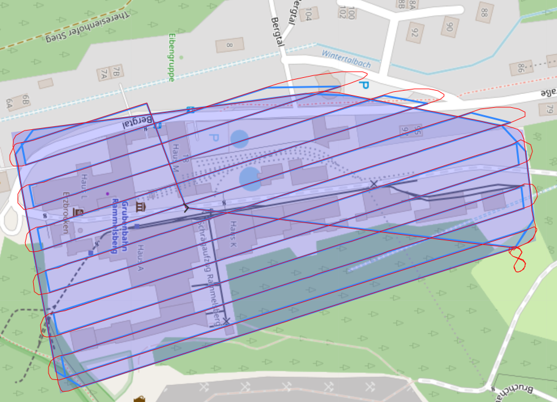

3D modeling and orthophotos

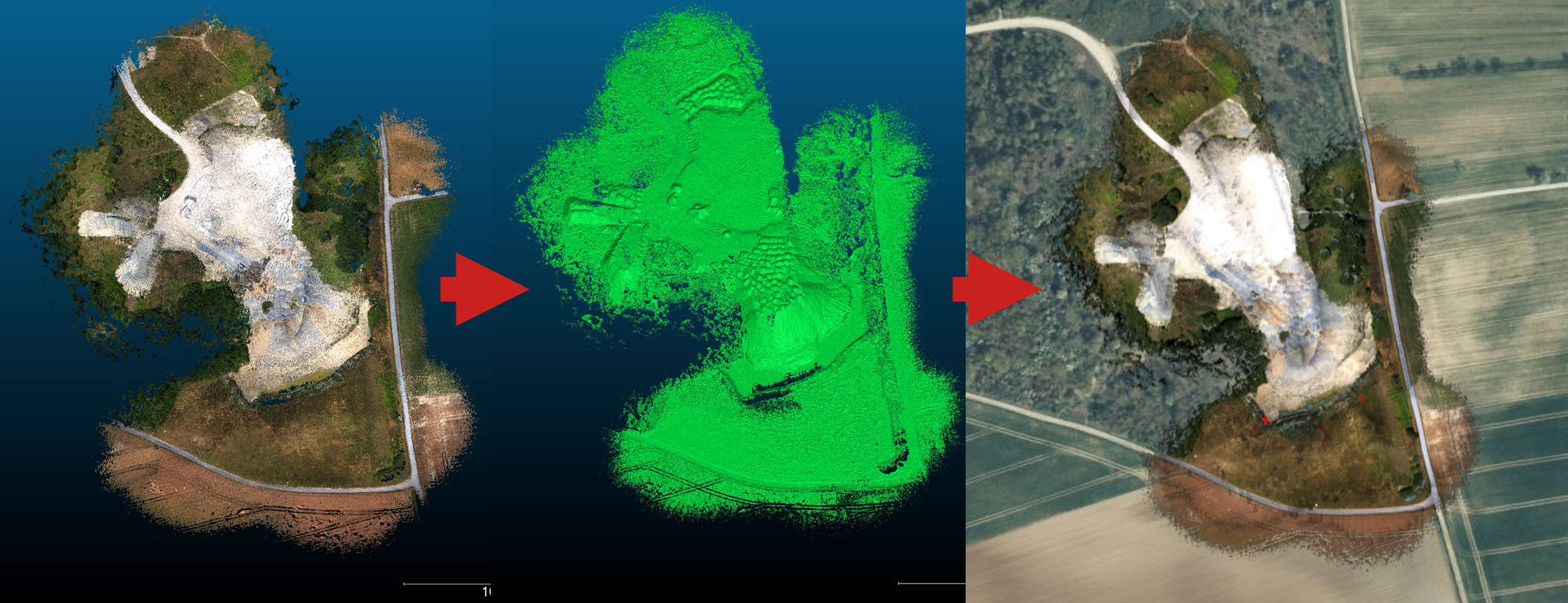

An adapted LiVOM algorithm (LIDAR-Inertial-Visual Odometry and Mapping) was used to register the LIDAR point clouds. In addition, various calibration methods for the camera and LIDAR were developed and implemented.

Raw data and registered point cloud

To create orthophotos, a pipeline was developed that first generates a mesh from the registered point clouds and then renders an orthophoto from this mesh. Finally, the orthophoto is georeferenced (with a selectable level of detail) and integrated into the ground station.
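The top-down projection and the georeferencing step can be illustrated with a strongly simplified sketch. Note the substitution: instead of building and rendering a mesh as described above, this example rasterizes the points directly into a grid and keeps the highest point per cell. It assumes a local metric coordinate frame with x pointing east and y pointing north; the function names and the string labels used as colors are hypothetical.

```python
import math


def rasterize_top_down(points, resolution_m):
    """Project points (x, y, z, color) straight down into a grid.

    Returns (grid, origin). grid[row][col] holds the color of the
    highest point in that cell, or None if the cell is empty.
    origin is the (x, y) position of the north-west corner of the grid.
    """
    pts = list(points)
    if not pts:
        raise ValueError("no points given")
    if resolution_m <= 0:
        raise ValueError("resolution must be positive")

    x_min = min(p[0] for p in pts)
    x_max = max(p[0] for p in pts)
    y_min = min(p[1] for p in pts)
    y_max = max(p[1] for p in pts)

    cols = int(math.floor((x_max - x_min) / resolution_m)) + 1
    rows = int(math.floor((y_max - y_min) / resolution_m)) + 1

    grid = [[None] * cols for _ in range(rows)]
    height = [[-math.inf] * cols for _ in range(rows)]

    for x, y, z, color in pts:
        col = int((x - x_min) / resolution_m)
        row = int((y_max - y) / resolution_m)   # row 0 is the north edge
        if z > height[row][col]:                # simple z-buffer
            height[row][col] = z
            grid[row][col] = color
    return grid, (x_min, y_max)


def pixel_to_world(row, col, origin, resolution_m):
    """World coordinates of a pixel center (the georeferencing step)."""
    x0, y_top = origin
    return (x0 + (col + 0.5) * resolution_m,
            y_top - (row + 0.5) * resolution_m)


# Example with three labelled points and a cell size of 1 m.
cloud = [(0.0, 0.0, 0.0, "road"), (0.25, 0.25, 1.5, "car"),
         (1.5, 1.5, 0.0, "grass")]
image, origin = rasterize_top_down(cloud, resolution_m=1.0)
```

Rendering from a mesh, as in the pipeline described above, fills the gaps between points that this direct rasterization leaves empty. The `resolution_m` parameter plays the role of a selectable level of detail.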

Project duration

7/2021 – 12/2023

Consortium

- Zentrum für Telematik e.V., Würzburg

- Hensel Fahrzeugbau GmbH & Co. KG, Saarbrücken, Waldbrunn

- Polizeidirektion Lüneburg

Funding

This project is funded by the German Federal Ministry of Education and Research (Bundesministerium für Bildung und Forschung) as part of the program "Forschung für die zivile Sicherheit" (Research for Civil Security).

Funding announcement (Bekanntmachung): “Anwender - Innovativ: Forschung für die zivile Sicherheit II”

Contact

E-Mail: